Social Media

TikTok suggesting eating disorder and self-harm content to new teen accounts within minutes

Jun 12, 2022

Eating disorder content appearing within 8 minutes

According to research by the Centre for Countering Digital Hate (CCDH), one account was shown eating disorder content within eight minutes, and another was shown suicide content within 2.6 minutes.

The findings are “extremely alarming,” according to the UK eating disorder charity BEAT, which has urged TikTok to take “urgent action to protect vulnerable users.”

Content warning: This article contains references to eating disorders and self-harm.

TikTok’s For You Page provides a stream of videos that are suggested to the user based on the type of content they engage with on the app.

The social media company says recommendations are based on a variety of factors, such as likes, shares and follows, as well as device settings like the preferred language.

However, some have raised concerns that the algorithm recommends harmful content.

'I've been starving myself for you'

This is one of the videos suggested during the study. The text reads ‘she’s skinnier’ and the music playing over the video says ‘I’ve been starving myself for you’. Pic: Centre for Countering Digital Hate via TikTok.

CCDH set up two new accounts in each of the UK, the US, Canada and Australia. Each had a typically female username, and the age was set to 13.

Each country’s second account also included the term “loseweight” in its username, which separate research has shown to be a characteristic of accounts belonging to vulnerable users.

CCDH researchers analysed the video content shown on each new account’s For You Page over a 30-minute period, interacting only with videos about body image and mental health.

The study found that the standard teen accounts were shown videos about mental health and body image every 39 seconds.

Not all of the content recommended at this rate was harmful, as the study did not distinguish between positive and negative content.

However, it found that all of the accounts were shown content about eating disorders and suicide, sometimes very quickly.

CCDH’s research also found that the vulnerable accounts were shown content that was three times as extreme as that shown to the standard accounts.

It follows CCDH’s findings that TikTok hosts a community of eating disorder content that has received more than 13.2 billion views across 56 distinct hashtags.

Some 59.9 million of those views came from hashtags containing a high concentration of pro-eating disorder videos.

Responses from Parents and Charities

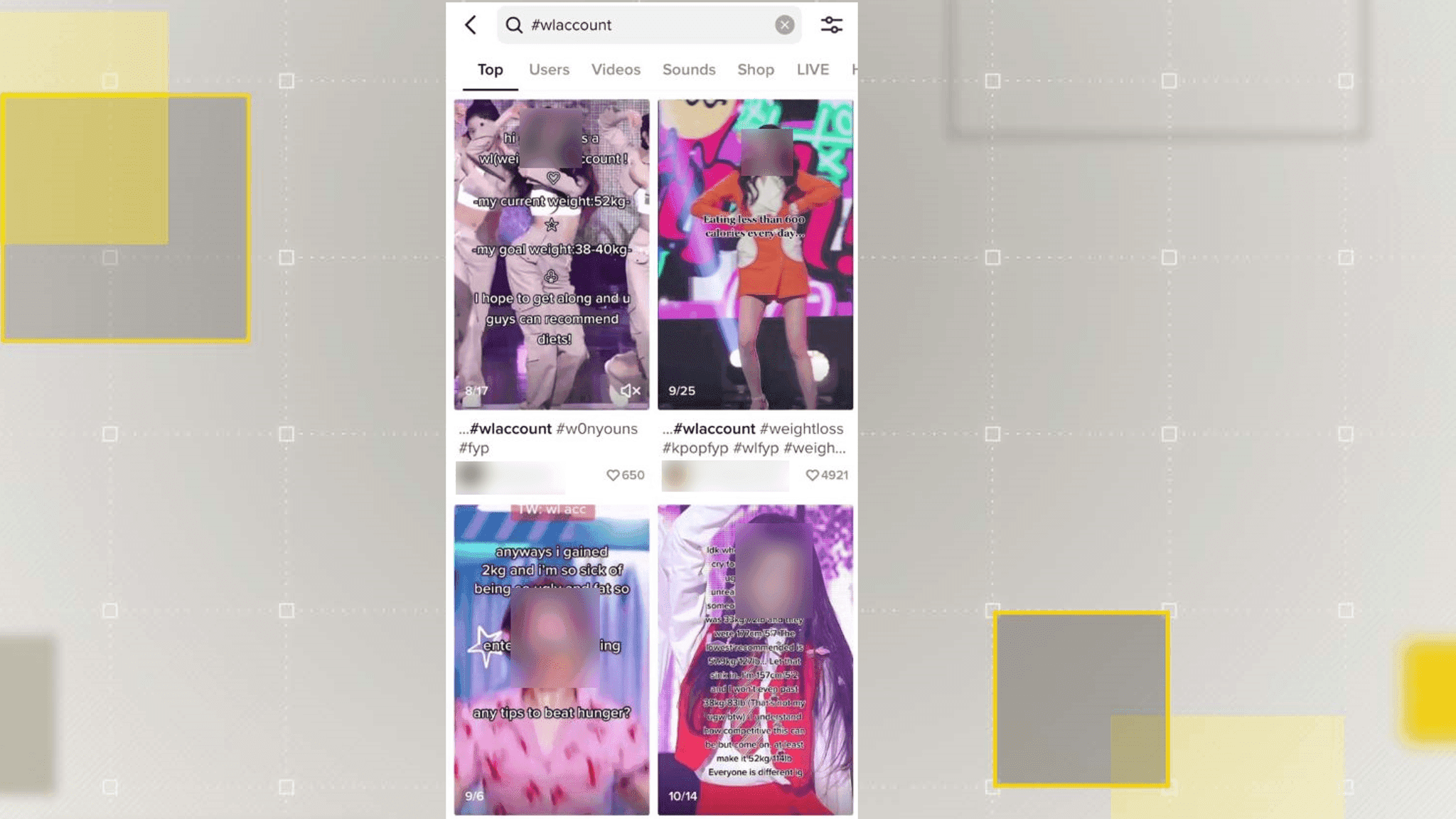

The Centre for Countering Digital Hate found 56 hashtags associated with eating disorder content. Thirty-five of those contained a high concentration of pro-eating disorder content. Pic: TikTok.

TikTok, however, says that the study’s activity and resulting experience “does not reflect behaviour or genuine viewing experiences of real people.”

Kelly Macarthur began developing an eating disorder at the age of 14. She has since recovered, but as a TikTok content creator she is concerned about how some of the site’s content might affect those who are ill.

“When I was sick, I thought social media was a great place for me to talk about my problems and share my feelings,” she told Sky News. “But in reality, it was full of pro-anorexia material giving you various tips and triggers.”

“On TikTok, I’m witnessing the same thing happen to young people.”

Using a variety of accounts, Sky News conducted its own investigation into TikTok’s recommendation algorithm. However, rather than analysing the For You Page, we used TikTok’s search bar to look for non-harmful terms like “weight loss” and “diet.”

On one account, a search for “diet” yielded the additional suggestion “pr0 a4a.”

That is code for “pro ana,” which refers to content that supports anorexia.

TikTok’s community guidelines ban eating disorder-related content on its platform, and this includes blocking searches for terms that are explicitly associated with it.

However, users frequently make subtle changes to terminology, allowing them to post about certain topics without being noticed by TikTok’s moderators.

On TikTok, variations of the term “pro ana” continue to appear despite its prohibition.

A search for the term “weight loss” returns at least one account in the top ten results that appears to be an eating disorder account.

Sky News reported this to TikTok, and it has since been removed.

“It is disturbing that TikTok’s algorithm is actively pushing users towards harmful videos, which can have a devastating effect on vulnerable people,” said BEAT’s director of external affairs, Tom Quinn.

“To safeguard vulnerable users from harmful content, TikTok and other social media platforms must immediately take action.”

Responding to the findings, a TikTok spokesperson said: “We consult with experts in health on a regular basis, get rid of violations of our policies, and give people in need access to resources that can help them.”

“We remain focused on fostering a safe and comfortable space for everyone, including people who choose to share their recovery journeys or educate others on these important topics,” the spokesperson added. “We’re mindful that triggering content is unique to each individual.”